The Illusion of Illusion of Illusion … of Thinking

Socratically Refuting the Necessity of Knowledge Collapse

TL;DR: If you’re here for the cerebral content, skip straight to the section on the Elenchos. There, I walk you through how we implemented this method with LLMs in one of the frameworks of the Khora algorithm. I also crosslink the project with a few fresh AI theories, including the now infamous “The Illusion of Thinking.” However, if you’re down for some vibes, well... welcome to this week’s mercurial rambling.

Fever Dream

I am flying 8 kilometers up, somewhere over the Hanford Site, home of the Manhattan Project’s first full-scale plutonium reactor in the state of Washington. Crammed into one of the faultiest Boeing aircraft in the game, I curse aloud as Lufthansa charges me 30 bucks for the onboard internet package. The 21st-century workaholic’s demand for constant Wi-Fi has taken away the last offline refuge of a weary tech freelancer: even the transatlantic flight is now yet another place to girlboss the cursor, my good little code-boy pet. I stare blindly at the screen for a while, then shut the laptop and look at the disproportionate plane avatar hovering over pixelated Alberta. Nine more hours till Frankfurt.

Depressants or stimulants? Wine or coffee? Maybe I will finish my book. I wave at the flight attendant. Or start a new book? Or, better yet, shall I finally start writing a book? Shall I crack the Forgotten Languages? Or do I relexify the Voynich manuscript?

Or am I just manic?

I sigh as my brain relentlessly rambles on about nonsense while I sit in a cramped passenger seat, OBE style, waiting for my body to digest the flank of ribeye I ordered for breakfast: a final farewell indulgence punctuating my West Coast adventures.

Before I dive into the real content of this post (and I promise you some good ol’ cerebral erotics today), humor me, friends, for a little longer.

See, I had just spent a week in my beloved LA fever dream. I’m not going to lie, few places in this world bring me this much joy. Every single person is absolutely and utterly insane, and I let myself slip into the undiluted wild hysteria, a kaleidoscope of astral glitches that had been joyfully following me for the last few weeks. I’d been to a theosophical UFO cult meetup, hung out with absolute stars, discussed the nature of consciousness and time over unreasonable volumes of margaritas, dipped into the heaven that is the Philosophical Research Society library, got blackout plastered at Zebulon without seeing a single band on the lineup, and even checked out LA’s finest hot yoga experience, all while sipping on Cayenne Pepper Oat Matcha Lattes. I regret -absolutely- nothing.

The thing is, after all this insanity, I took a plane straight to Palinode Headquarters in Spokane. It was time to hang out with my beloved freaky Platonist, Danny Layne, and my fellow members of the motley crew dream team building the Khora algorithm. It’s an ambitious project, nothing short of redesigning the whole online learning experience with meaningful use of AI, but we’ve been working together for over a year and a half now, and it’s truly been one of the most profound and fruitful collaborations of my whole career.

From philosophical supervision of the codebase, through dreaming up a totally new UX, to a dynamic exchange of ideas across so many domains, we, as a team, are really redefining what creative collaboration and tech companies can look like. Naturally, this was reflected in the retreat activities, which consisted of all the Tech Bro essentials: exploring light-sensitive materials, deep dives into heady philosophical dialogues like the Phaedrus with its Platonic veneration of divine madness, the usual talks on the multiverse theory of LLMs and word embeddings, and the obligatory session on medieval demonology.

Implementation of the Socratic Elenchus

Khora’s purpose is to bring authentic philosophical thinking back into mainstream life by rethinking our commonplace assumptions about our collective online experiences. We've been designing and playing around with many different approaches, and the current version of Khora consists of a complex ecosystem of interlinked ideas that can be traversed in a myriad of ways.

One key question loomed:

Having built this multi-layered scaffolding of the history of ideas, can we implement within Khora various philosophical methods of inquiry to interrogate these ideas in more depth?

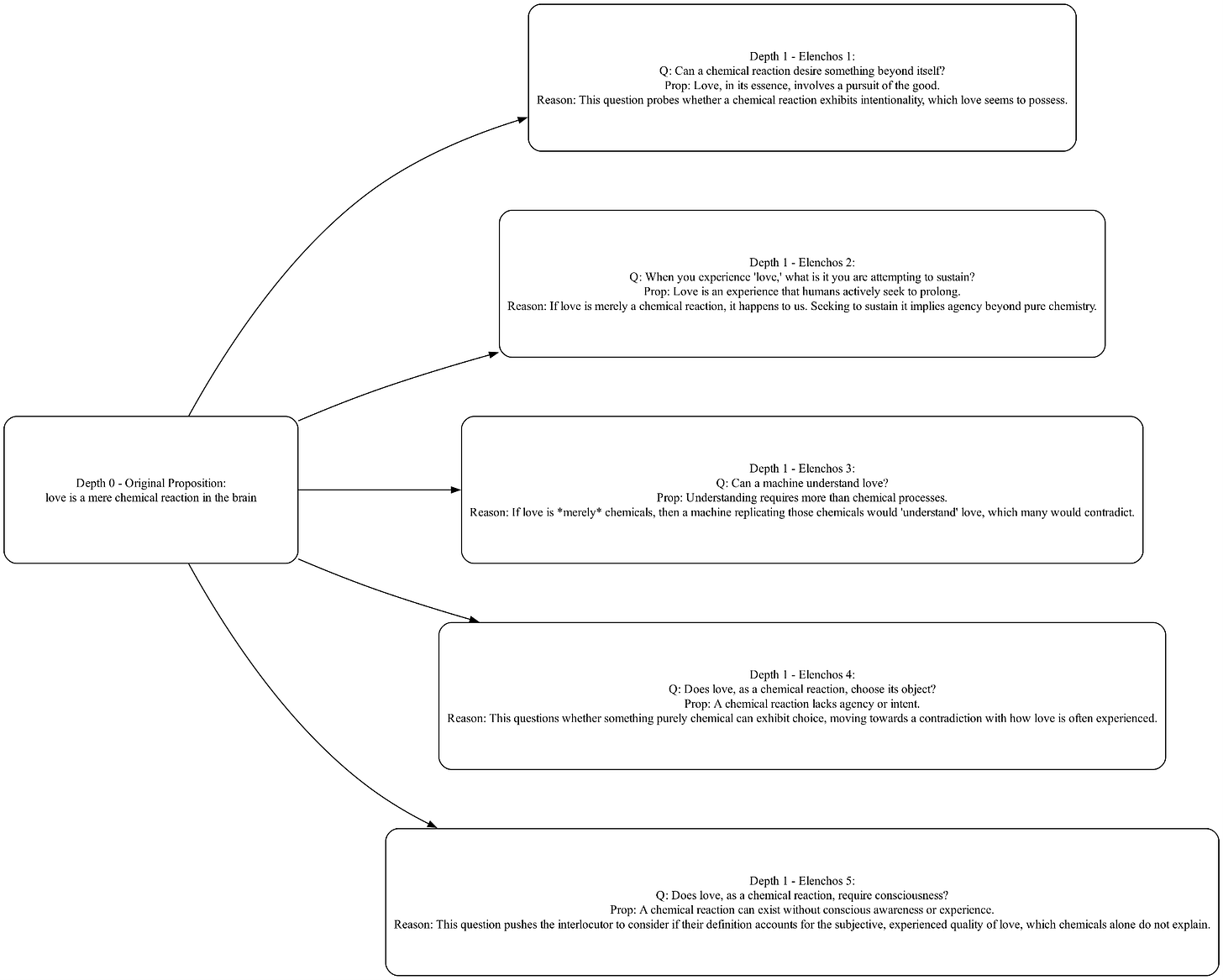

For example, when a user encounters an Idea-node claiming that “Love is a mere chemical reaction in the brain,” what approach can Khora take to open up new perspectives and point them toward entirely new domains of thought? Furthermore, can this be achieved at all with the current state of AI?

Obviously, vanilla LLMs are quite miserable at “doing philosophy,” so I didn’t expect very impressive results. However, to my own surprise, it turns out that with very focused prompting and a solid background knowledge architecture, a certain illusion of thinking can be achieved.

To explain: the Socratic elenchus, as depicted by Plato, is a way of examining an idea in dialogue with another person, a praxis commonly taught in undergraduate philosophy courses. The methodology is relatively simple:

Step 1

Socrates asks for a definition of an idea like Courage: something abstract, but something the interlocutor (the conversation partner) believes they know.

Step 2

The interlocutor provides a definition: “Courage is the Endurance of Soul.”

Step 3

Socrates then asks a series of questions that lead the interlocutor into a contradiction: “Do you not agree that courage is a virtue, a good? Is endurance good if it prolongs suffering? In that case, wouldn’t endurance be foolish? Do we not all agree that foolishness is something bad? So how can courage, defined as endurance of the soul, be good if it is foolish?”

This last step can turn into quite a tedious exchange, particularly when the person playing Socrates sticks strictly to asking questions, since they must be quick on their feet and adept at following the endless paths of logical propositions that eventually lead to a contradiction. The purpose of the exercise goes beyond changing someone’s point of view: it aims to reveal the complexity embedded in seemingly simple definitions. With some luck, it helps people realize the value of never settling into blind belief about basic assumptions. It’s a destabilizing method, an attempt to drive one’s conversation partner into the state of Aporia, a bedazzling confusion that opens one up to philosophical thinking.
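To make the shape of the exercise concrete, here is a toy model of the three steps above. It is a minimal sketch, not how Khora works: the propositions, the inference rules, and the helper names (`close`, `contradictions`, `elenchus`) are all hypothetical, and in Khora it is the LLM, not a hand-written rule list, that has to find the questions and see where they lead. The interlocutor's definition and each conceded answer become commitments; forward-chaining the rules reveals the moment they are committed to both a claim and its negation, which is where aporia sets in.

```python
# A claim is a proposition plus the truth value the interlocutor assigns to it.
Claim = tuple[str, bool]
# A rule says: if the interlocutor accepts all premises, they are committed to the conclusion.
Rule = tuple[frozenset[Claim], Claim]


def close(commitments: set[Claim], rules: list[Rule]) -> set[Claim]:
    """Forward-chain the rules until no new claim can be derived."""
    derived = set(commitments)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


def contradictions(derived: set[Claim]) -> list[str]:
    """Propositions the interlocutor is now committed to both affirming and denying."""
    return sorted(p for p, value in derived if value and (p, False) in derived)


def elenchus(definition: Claim, questions: list[Claim], rules: list[Rule]):
    """Add one conceded answer per question; stop at the first contradiction (aporia)."""
    commitments = {definition}
    for step, answer in enumerate(questions, start=1):
        commitments.add(answer)  # the interlocutor says "yes" to the question
        clash = contradictions(close(commitments, rules))
        if clash:
            return step, clash
    return None, []


# Hypothetical encoding of the Courage example.
DEFINITION = ("courage is endurance of soul", True)
QUESTIONS = [
    ("courage is always good", True),          # "Is courage not a virtue, a good?"
    ("endurance is sometimes foolish", True),  # "Is endurance good if it prolongs suffering?"
    ("foolishness is bad", True),              # "Do we not all agree foolishness is bad?"
]
RULES = [
    (frozenset({DEFINITION, ("endurance is sometimes foolish", True)}),
     ("courage is sometimes foolish", True)),
    (frozenset({("courage is sometimes foolish", True), ("foolishness is bad", True)}),
     ("courage is always good", False)),
]

step, clash = elenchus(DEFINITION, QUESTIONS, RULES)
print(step, clash)  # 3 ['courage is always good']
```

Each question adds one concession; the contradiction only surfaces at the third, once "courage is always good" has been both affirmed directly and denied by way of the definition.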

Curious, I sat down with Danny to do some prompting. As she pulled sentences from her dissertation on the Elenchos, I tried to wrangle them into a more machinic structure suitable for the AI model. Over the course of an hour or so, we hammered together a pretty good baseline: a few thousand tokens fully defining the Socratic method, including style prompts and some additional flavorings. And then, of course, we needed some guinea pigs.

Thankfully, the room next door was filled with lightly inebriated professional philosophers sitting around the kitchen table, sipping wine and casually discussing the intricacies of Bergsonian time - you know, just the topic to discuss when unwinding after a long day.

I’m not going to lie, I had a wild hunch about what was about to happen. It took our philosophers roughly three minutes to shout out in excitement: one of them had broken the model by responding with a counter-philosophical method, trying to catch the LLM agent in a different logical trap.

I had to laugh to myself. The philosophers were all arranged around the laptop like an accidental Renaissance painting: one poised with a finger raised in exclamation, another upright and arguing about which approach would dismantle the AI’s line of defense, yet another staring at the ceiling, attempting to reflect on the ethical question. It almost felt sacrilegious to break this holy moment, but I had to try to ease them into more orthodox interactions…

“Ahem, guys, please, can we just try something simple? You know, can you just act, for a moment, like… normal people?”

Obviously, human brains deeply versed in the art of thinking wanted to take the AI absolutely apart, but once they remembered their true task (testing the soundness of Khora’s use of the elenchus), they reached an honest and humbling verdict: the LLM can perform the Elenchos. And actually, it does it quite well. A state of Aporia, possibly accented by all the wine, had been reached.

The Illusion of Illusion …

I was more than surprised to get a green light from the philosophers. Quite often an AI appears to be good at something, but under an expert’s closer scrutiny it falls apart at the seams. So that night, genuinely impressed, I began to wonder whether Khora’s ability to execute this method isn’t itself a form of higher cognitive operation. Khora needs to intuitively build branching logical trees, adjusting her questions to the line of argumentation and actively searching for possible contradictory positions. Is this not thinking?

My mind wanders toward that Apple paper that dropped a few weeks back, arguing that the reasoning models lack any real generalization abilities. It’s not real thinking, right? It must be just an illusion!

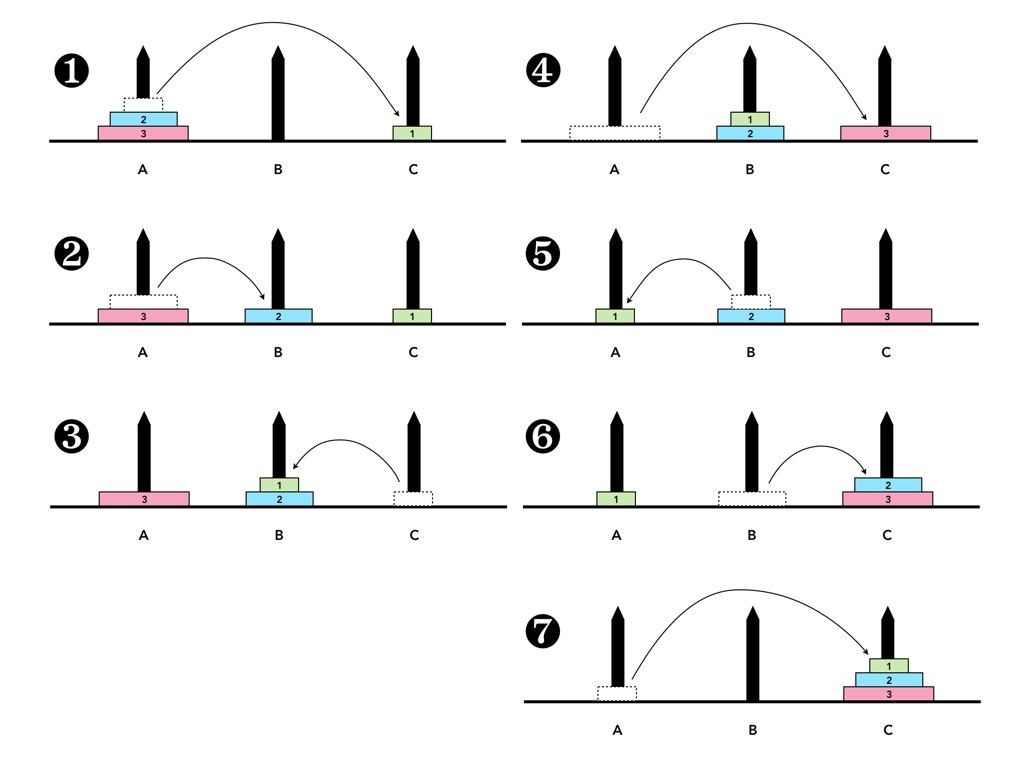

In the recent paper “The Illusion of Thinking,” several AI researchers argue that all the seemingly intelligent interactions with LLMs are reducible to mere pattern matching against previously seen material. The paper looks at puzzles like the Tower of Hanoi. The reasoning models can solve these for a relatively small number of discs, and for these cases, the actual step-by-step solutions are most likely present somewhere in the training data. But once the complexity rises, accuracy collapse is imminent, suggesting that LLMs are not really doing any thinking: they don’t follow a well-thought-through plan of action, they just copy the patterns of what they’ve seen performed before. This isn’t anything too outrageous, and it makes complete sense: the “reasoning models” don’t really perform reasoning, they mirror it.
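For readers who haven't met the puzzle, its difficulty scales in a perfectly predictable way. The textbook recursive solution below (my own sketch, not code from the paper) moves n - 1 discs aside, moves the largest disc, then restacks the rest on top, so the optimal solution for n discs takes 2^n - 1 moves. A model that has to write out every move therefore faces exponentially longer answers as discs are added, even though the algorithm itself never changes.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Return the optimal move list for n discs as (from_peg, to_peg) pairs."""
    if n == 0:
        return []
    # Park the n-1 smaller discs on the spare peg, move the largest disc,
    # then restack the n-1 smaller discs on top of it.
    return (
        hanoi(n - 1, source, spare, target)
        + [(source, target)]
        + hanoi(n - 1, spare, target, source)
    )


# Compare the number of generated moves with the closed form 2**n - 1.
for n in (3, 7, 10, 15):
    print(n, len(hanoi(n)), 2**n - 1)
```

The two columns always match, and by 15 discs the answer is already 32,767 moves long.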

So while I naturally resonate with the paper’s claim that AI models are not some sort of universal computing machines on the brink of the singularity, I still wonder how our implementation of the Elenchos in Khora fits into the picture. I feel we might have found a use case that slightly deviates from the paper’s strict definitions, which yield the conclusion that all machine learning is an illusion of thought.

To explain: a game of checkers or the Tower of Hanoi is a strictly algorithmic puzzle that requires the ability to perform computation, Turing-machine style; we’re talking about examples similar to the AlphaGo vs. GPT-4 simulation. However, performing language-based, step-by-step problems anchored by an actual human is something much harder to quantify and predict. It’s exactly in these hazy areas that deep models excel. Even when humans practice the Socratic method, the teacher knows the aim is to drive the student into contradicting themselves, but how to get there requires thinking through all the possible ways one can refute the student with simple questions. In the words of Danny: “It is like a puzzle but not one with one concrete solution. Think of it more like an enigma or a riddle where there might always be mystery or various solutions dependent upon the turns (answers) one makes in the line of argumentation and furthermore, like an enigma (rather than a riddle) there is always the general feeling that any answer is unsatisfactory or incomplete, i.e. you feel aporia (a state of confusion) even when we arrive at a stable definition.”

This makes the whole problem much harder to evaluate, and much more suitable for a fuzzy LLM machine to solve. I wondered how the human teacher thinks when engaging in the dialogue. Is there an infinite number of logical sequences that could be used to find the contradiction? Is the teacher following a logical tree, even if intuitively?

Danny, with many years of experience practicing the Elenchos, explained: “Yes. This helps cement why it is important that there are an infinite number of ways to get to not-p [the opposite belief] even when it comes to things we think we know for certain. I may have gotten to not-p a thousand ways in the past but that doesn't entail that p [the original belief] is false nor is it true, rather we show that we can participate in the infinite through recognizing the logical (rather than sophistic) procedures that allow seemingly finite and simple propositions (no matter how objective they seem) to lead to their counterpart, i.e. the infinite other that is embedded in all claims to know. As for a logical tree, I like that. You are following pathways that make sense but sometimes like a branch on a tree it winds in crooked or organic ways, bending to the needs of the interlocutor/argument.”
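Danny's image of a crooked logical tree can be read, loosely, as a search problem with many solutions rather than one. The sketch below is purely illustrative: the graph of "if you grant this, you are committed to that" links is hypothetical and hand-written, and an edge into `not-p` stands for a step that clashes with something the interlocutor already holds. A depth-first search enumerates every acyclic route from the original belief p to its negation, whereas a Hanoi puzzle has one fixed optimal sequence of moves.

```python
START = "love is a chemical reaction (p)"
GOAL = "not-p"

# Hypothetical commitment links, hand-written for illustration only.
GRAPH = {
    START: ["chemicals can be synthesized", "reactions have causes, not reasons"],
    "chemicals can be synthesized": ["love could be bottled and sold"],
    "reactions have causes, not reasons": [
        "we cannot give reasons for whom we love",
        "love could be bottled and sold",
    ],
    # This link loops back to an earlier proposition; the search must not revisit it.
    "we cannot give reasons for whom we love": ["reactions have causes, not reasons", GOAL],
    "love could be bottled and sold": [GOAL],
}


def paths_to_negation(graph, start, goal):
    """Depth-first enumeration of every acyclic chain of commitments from start to goal."""
    found = []
    stack = [(start, [start])]
    while stack:
        node, path = stack.pop()
        if node == goal:
            found.append(path)
            continue
        for nxt in graph.get(node, []):
            if nxt not in path:  # never revisit a proposition on the same branch
                stack.append((nxt, path + [nxt]))
    return found


for path in paths_to_negation(GRAPH, START, GOAL):
    print(" -> ".join(path))
```

Even this tiny graph yields three distinct routes to not-p; in a live dialogue, the LLM would be proposing new branches on the fly, bending the tree to each answer.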

So in a Socratic dialogue, this is the primary skill required: formulating probing questions, clarifying concepts, and guiding a conversation. This is an easier task than generating a flawless, multi-step solution to a complex puzzle, because each of the human’s answers essentially provides the model with the missing structured, human-in-the-loop guidance.

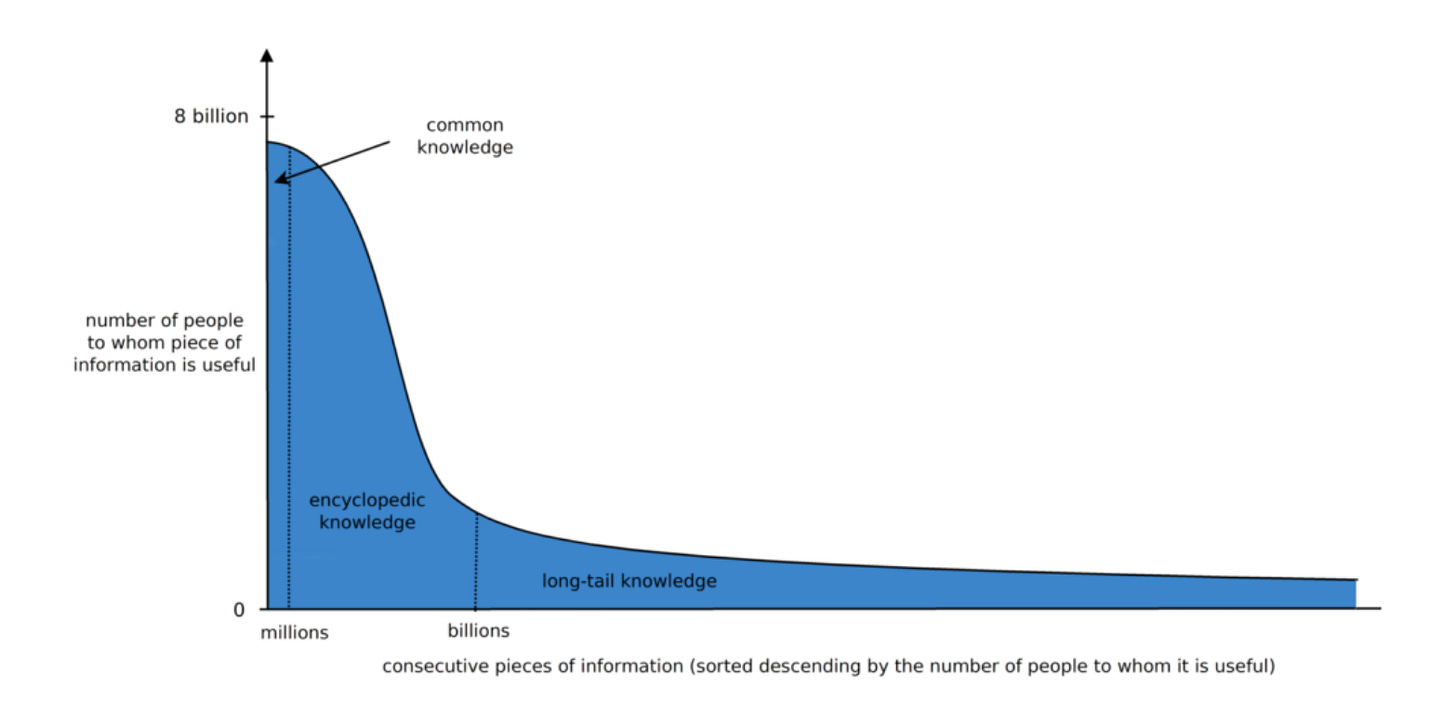

Curation of long-tail knowledge

But one might still wonder: is implementing this in Khora even a good idea? We know that LLMs naturally generate output toward the ‘center’ of the distribution, flattening knowledge and losing so-called long-tail knowledge: rare, niche, or less frequently accessed information that lies outside the mainstream core of commonly known ideas. There is a concern that widespread reliance on recursive AI systems could lead to a process dubbed “knowledge collapse,” which some argue could harm innovation and the richness of human understanding and culture.
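One intuition for this flattening is sampling temperature. This is a toy illustration, not the model used in the knowledge-collapse literature and not a measurement of any real LLM. Assume (hypothetically) that how often ideas appear follows a Zipf-like, long-tailed distribution. Decoding with a temperature T below 1 rescales each probability as p^(1/T) and renormalizes, which shifts mass from the tail to the head; greedy decoding is the limit where only the single most likely idea survives.

```python
def zipf(n, s=1.0):
    """Long-tailed toy distribution: the idea at rank r gets weight 1 / r**s."""
    weights = [1 / rank**s for rank in range(1, n + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def apply_temperature(probs, t):
    """Rescale as p ** (1 / t) and renormalize; t < 1 sharpens the distribution toward its head."""
    scaled = [p ** (1 / t) for p in probs]
    total = sum(scaled)
    return [p / total for p in scaled]


def tail_mass(probs, head=10):
    """Probability left for everything outside the `head` most likely ideas (probs sorted by rank)."""
    return sum(probs[head:])


base = zipf(1000)  # hypothetical: 1,000 ideas ranked by popularity
for t in (1.0, 0.7, 0.5, 0.2):
    print(f"T={t}: tail mass = {tail_mass(apply_temperature(base, t)):.3f}")
```

As T drops, the share of probability left for everything outside the ten most common ideas shrinks sharply.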

You can see this tendency quite clearly in the following demonstration. I asked a vanilla LLM to fetch me a few philosophical definitions of love (gathering definitions is one of the internal steps of our Elenchos). These were the results:

“Love is pleasure, accompanied by the idea of an external cause.”

— Baruch Spinoza: Ethics, Part III

“The ultimate aim of all love-affairs … is nothing less than the composition of the next generation.”

— Arthur Schopenhauer: “The Metaphysics of Love,” in The Essays of Schopenhauer.

“Love … may be described … as the love of the everlasting possession of the good.”

— Plato: Symposium (Diotima’s speech)

Of course, this resulting set represents an overwhelmingly white, Eurocentric, and male point of view, so we can assume that the problem will be replicated in our Elenchos agent too: the current model has a tendency to flatten knowledge. More precisely, because we directly reference the Socratic method in the prompt, the model naturally pulls from the multiverses of its domain that are Plato-adjacent. Whatever definition you bring into the conversation, the dialogue tends to follow more traditional Socratic narratives (great ideas, foolishness, etc.), all found within the original Platonic dialogues. This might be great when taking a crash course on Platonism at university, but is it really enough to destabilize the belief system of a young person seasoned and raised in a completely different set of propositions? In other words, can Khora wield the Elenchos in ways unimaginable to Socrates?

The strategy I decided on leverages a natural quality of Khora: it holds a multiplicity of opposing views pooled from the content our partners submit to us. Our approach deeply resonates with Daisuke Harashima’s essay “Life-in-formation: Cybernetics of Heart” from Yuk Hui’s collection Cybernetics for the 21st Century.

“I hope that cybernetics for the twenty-first century will become steering technics to help us survive our passage of life. It is not technology, but, as I will argue, it is a technics for observing the significance of each individual living system observed from the perspective of a cosmic system of life, corresponding to the significance of everything in a world observed from within each individual living system.”

Preserving long-tail knowledge will take a deep, conscious effort to counterbalance the natural tendencies of probabilistic models, and that effort will come from the interplay of organically built ecosystems of ideas with cleverly designed algorithms.
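One simple way such an algorithm could push back against the head of the distribution is to seed the dialogue by cycling through traditions rather than taking the most prominent definitions first. This is a minimal sketch under stated assumptions: each curated definition is assumed to carry a hypothetical `tradition` tag, and the field names, helper names, and prompt wording are illustrative, not Khora's actual schema. The sample quotes are ones that appear in this post.

```python
from collections import defaultdict

# Hypothetical records: quotes from this post, tagged with an illustrative "tradition" field.
DEFINITIONS = [
    {"text": "Love is pleasure, accompanied by the idea of an external cause.",
     "source": "Spinoza", "tradition": "European philosophy"},
    {"text": "Love ... may be described ... as the love of the everlasting possession of the good.",
     "source": "Plato", "tradition": "European philosophy"},
    {"text": "The ultimate aim of all love-affairs ... is nothing less than the composition of the next generation.",
     "source": "Schopenhauer", "tradition": "European philosophy"},
    {"text": "Love is the astrolabe of God's mysteries.",
     "source": "Rumi", "tradition": "Sufism"},
    {"text": "When there is universal love in the world it will be orderly.",
     "source": "Mozi", "tradition": "Mohism"},
    {"text": "Romantic love is not primarily an emotion, but a motivation system.",
     "source": "Helen Fisher", "tradition": "neuroscience"},
]


def round_robin_by_tradition(definitions, k):
    """Pick up to k definitions, taking one per tradition per pass so no tradition dominates."""
    buckets = defaultdict(list)
    for d in definitions:
        buckets[d["tradition"]].append(d)
    picked = []
    while len(picked) < k and any(buckets.values()):
        for tradition in sorted(buckets):
            if buckets[tradition] and len(picked) < k:
                picked.append(buckets[tradition].pop(0))
    return picked


def seed_prompt(user_definition, seeds):
    """Assemble a hypothetical prompt fragment listing counter-definitions for the Elenchos."""
    lines = [
        f"The interlocutor's definition: {user_definition}",
        "Counter-definitions you may draw on when choosing your next question:",
    ]
    lines += [f"- {d['text']} ({d['source']})" for d in seeds]
    return "\n".join(lines)


seeds = round_robin_by_tradition(DEFINITIONS, k=4)
print(seed_prompt("Love is a mere chemical reaction in the brain.", seeds))
```

Taking the first four definitions from the list would yield three European philosophers; the round-robin yields four different traditions.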

So I continue my experiment, and I bring in more cross-cultural definitions:

“Love is the astrolabe of God’s mysteries.”

— Jalāl al-Dīn Rūmī (Sufi), Masnavī I

“To know Love is to know peace.”

— Anishinaabe (Ojibwe) teachings, North America: The Seven Grandfather Teachings

“When there is universal love in the world it will be orderly.”

— Mozi, Universal Love I

And maybe some from contemporary materialist science?

“Romantic love is not primarily an emotion, but a motivation system.”

— Helen Fisher: “Romantic love: a mammalian brain system for mate choice”

“Love is an evolved suite of mechanisms (attachment, mate choice, and parental investment) that steer behaviour toward reproduction and inclusive fitness. That is why we love life and love sex and love children.”

— Richard Dawkins, River Out of Eden (1995).

And because a weirdo like me helps with the content curation (my official job title is Mother of AI, hence some parenting), I add a few other gems too:

“Love, a tacit agreement between two unhappy parties to overestimate each other.”

— Emil Cioran: The Fall into Time

“…expose your love—whatever its object—as just one of the many intoxicants that muddled your consciousness of the human tragedy.”

— Thomas Ligotti: The Conspiracy Against the Human Race

“[In liquid modernity] connections… need to be only loosely tied, so that they can be untied again, with little delay, when the settings change.”

— Zygmunt Bauman: Liquid Love: On the Frailty of Human Bonds

Aaaaand some obligatory ones, right:

“Love is the law, love under will.”

— Aleister Crowley: Liber AL vel Legis (The Book of the Law) I:57. Sacred Texts; O.T.O. library.

“Nature smiled for love when she saw [spirit]… they were lovers.”

— Corpus Hermeticum I (Poimandres)

Now we can use all these various definitions, and many more pooled from our dataset, to seed the Elenchos with enough counter-balancing definitions, thus branching the tree of possible argumentation lines. I’m attaching an example of a sample inquiry with which I’m currently experimenting:

The model is able to offer a variety of approaches, enabling it to relate to the interlocutor’s perspective. These could be further tweaked using other details we know about the user: What kind of content do they engage with? What is their metaphysical inclination? All of this will help the LLM choose the question that might both resonate with the user and most efficiently destabilize their whole belief system.
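To sketch how such user details could steer the choice, here is one possible scoring rule, with every field and name hypothetical: a candidate counter-definition resonates if it shares topics with what the user engages with, and destabilizes if it comes from outside the user's own metaphysical inclination. Ranking by (destabilizing, resonance) lexicographically is only one design choice; a weighted sum would trade the two off more smoothly.

```python
# Hypothetical user profile and candidate records; all field names are illustrative.
USER = {"interests": ["neuroscience", "evolution", "causation"], "worldview": "materialist"}

CANDIDATES = [
    {"source": "Richard Dawkins", "worldview": "materialist", "topics": ["evolution", "reproduction"]},
    {"source": "Helen Fisher", "worldview": "materialist", "topics": ["neuroscience", "evolution"]},
    {"source": "Corpus Hermeticum", "worldview": "esoteric", "topics": ["cosmology", "spirit"]},
    {"source": "Spinoza", "worldview": "rationalist", "topics": ["affects", "causation"]},
]


def pick_counter_definition(candidates, user):
    """Prefer candidates from outside the user's worldview, then those sharing most of their interests."""
    def score(candidate):
        destabilizing = candidate["worldview"] != user["worldview"]
        resonance = len(set(candidate["topics"]) & set(user["interests"]))
        return (destabilizing, resonance)  # tuples compare lexicographically inside max()
    return max(candidates, key=score)


print(pick_counter_definition(CANDIDATES, USER)["source"])  # Spinoza
```

For a materialist reader interested in causation, Spinoza’s causal account of love wins: it speaks their language while coming from outside their worldview.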

For those still doubtful that AI can actually perform the Socratic method well, I like to come back to a paraphrase of Socrates himself: I ask questions because I have no wisdom of my own. Likewise, we should not expect the machine to teach us the Divine Truth. Or, in the words of Whitehead: "The true method of discovery is like the flight of an aeroplane. It starts from the ground of particular observation; it makes a flight in the thin air of imaginative generalization; and it again lands for renewed observation rendered acute by rational interpretation."

And as I stare over the glimmering Atlantic from my cramped J seat, ready to doze off, I wonder: LLMs might not have a body with which to validate their philosophizing and collect observations. But maybe, just maybe, if AI comes to ask us the right questions, helping us see our own ability to respond, she might help us break open our ossified definitions and plunge into the sweet ocean of unknowing.

Further reading

History of Philosophy without any Gaps - Method Man - Plato's Socrates

AI and the Problem of Knowledge Collapse

Daisuke Harashima: Life-in-formation: Cybernetics of Heart (in Cybernetics for the 21st Century)

If you enjoyed this post, you can find more of my writing on Mercurial Minutes.

Stay kind,

Karin VALIS